Service Orchestrator

The service orchestrator is a component that is added to your inference graph as a sidecar container. Its main responsibilities are:

- Correctly managing the request/response paths described by your inference graph.
- Exposing Prometheus metrics.
- Providing tracing via OpenTracing.
- Adding CloudEvent-based payload logging.

From Seldon Core 1.1 onwards, the service orchestrator allows you to specify the protocol used by the data plane of your inference graph.

At present, we support the following protocols:

- Seldon
- Tensorflow
- V2

These protocols are supported by some of our pre-packaged servers out of the box. You can check their documentation for more details.

Additionally, you can see basic examples for all options in the protocol examples notebook.
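The main visible difference between the protocols is the shape of the request body. The minimal Python sketch below builds a REST/JSON body for each of them from a batch of numeric rows. It makes some assumptions. The identifiers `seldon`, `tensorflow` and `v2` are taken to be the protocol names. The function `build_payload`, the V2 input name `input-0` and the `FP32` datatype are hypothetical choices. The protocol references remain the authoritative source for the exact schemas.

```python
import json
from typing import Any, Dict, List


def build_payload(protocol: str, rows: List[List[float]]) -> Dict[str, Any]:
    """Wrap a batch of numeric rows in a REST/JSON request body for the given protocol.

    The V2 input name "input-0" and the FP32 datatype are hypothetical choices.
    """
    if protocol == "seldon":
        # Seldon protocol: tensor-like data under data.ndarray
        return {"data": {"ndarray": rows}}
    if protocol == "tensorflow":
        # TensorFlow Serving REST API, row ("instances") format
        return {"instances": rows}
    if protocol == "v2":
        # V2 inference protocol: named, typed tensors with flattened data
        n_cols = len(rows[0]) if rows else 0
        return {
            "inputs": [
                {
                    "name": "input-0",  # hypothetical input name
                    "shape": [len(rows), n_cols],
                    "datatype": "FP32",
                    "data": [x for row in rows for x in row],
                }
            ]
        }
    raise ValueError(f"unsupported protocol: {protocol!r}")


for name in ("seldon", "tensorflow", "v2"):
    print(name, json.dumps(build_payload(name, [[1.0, 2.0]])))
```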

Design

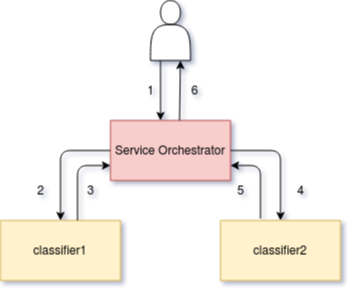

The service orchestrator’s core concern is to manage the request/response flow of calls through the defined inference graph. For example, consider the following graph:

    apiVersion: machinelearning.seldon.io/v1
    kind: SeldonDeployment
    metadata:
      name: fixed
    spec:
      name: fixed
      protocol: seldon
      transport: rest
      predictors:
      - componentSpecs:
        - spec:
            containers:
            - image: seldonio/fixed-model:0.1
              name: classifier1
            - image: seldonio/fixed-model:0.1
              name: classifier2
        graph:
          name: classifier1
          type: MODEL
          children:
          - name: classifier2
            type: MODEL
        name: default
        replicas: 1

The service orchestrator is added to the graph and manages the request flow as follows (the step numbers correspond to the diagram above):

The initial request (1) reaches the service orchestrator, which forwards it to the first model (2). The service orchestrator captures that model's response (3) and forwards it to the second model (4). The second model's response is again captured by the service orchestrator (5) before being returned to the caller (6).
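The six-step flow described above can be mimicked in plain Python. This is only a simulation under stated assumptions. The models are in-process functions rather than containers reached over REST or gRPC. `orchestrate_chain`, `classifier1` and `classifier2` are hypothetical stand-ins, not part of Seldon Core. The sketch simply shows that every hop passes back through the orchestrator.

```python
from typing import Callable, Dict, List, Tuple

Payload = Dict
Model = Callable[[Payload], Payload]


def orchestrate_chain(request: Payload, models: List[Tuple[str, Model]]) -> Tuple[Payload, List[str]]:
    """Send a request through a linear chain of models, collecting every hop."""
    hops = ["caller -> orchestrator"]             # step (1)
    payload = request
    for name, model in models:
        hops.append(f"orchestrator -> {name}")    # steps (2) and (4)
        payload = model(payload)
        hops.append(f"{name} -> orchestrator")    # steps (3) and (5)
    hops.append("orchestrator -> caller")         # step (6)
    return payload, hops


# Hypothetical stand-ins for the classifier1 and classifier2 containers
def classifier1(p: Payload) -> Payload:
    return {"data": {"ndarray": [[x * 2 for x in row] for row in p["data"]["ndarray"]]}}


def classifier2(p: Payload) -> Payload:
    return {"data": {"ndarray": [[x + 1 for x in row] for row in p["data"]["ndarray"]]}}


response, hops = orchestrate_chain(
    {"data": {"ndarray": [[1, 2]]}},
    [("classifier1", classifier1), ("classifier2", classifier2)],
)
print(response)  # {'data': {'ndarray': [[3, 5]]}}
for hop in hops:
    print(hop)
```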

For more complex inference graphs, the service orchestrator also handles routing components, which decide which subset of their child components receives the request, and aggregation components, which combine the responses from multiple components.
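The difference between routing and aggregation can be illustrated with a small, self-contained Python sketch. It makes a few assumptions. In-process functions stand in for containers. `Node`, `execute`, `average` and the example nodes are hypothetical. Only two behaviours are modelled: a router forwards the request to the single child it picks, and a combiner sends it to every child and merges the responses. Details of the real orchestrator, such as how a MODEL with several children is handled, are deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Payload = Dict[str, Any]


@dataclass
class Node:
    """Hypothetical in-memory graph node; `type` mirrors MODEL, ROUTER or COMBINER."""
    name: str
    type: str
    # MODEL: payload -> payload; ROUTER: payload -> child index; COMBINER: list of payloads -> payload
    fn: Callable[..., Any]
    children: List["Node"] = field(default_factory=list)


def execute(node: Node, payload: Payload) -> Payload:
    if node.type == "MODEL":
        # For simplicity a model forwards its output to at most one child
        if len(node.children) > 1:
            raise ValueError(f"{node.name}: this sketch only chains MODEL nodes linearly")
        out = node.fn(payload)
        return execute(node.children[0], out) if node.children else out
    if node.type == "ROUTER":
        # Only the child chosen by the router receives the request
        return execute(node.children[node.fn(payload)], payload)
    if node.type == "COMBINER":
        # Every child receives the request; the combiner merges their responses
        return node.fn([execute(child, payload) for child in node.children])
    raise ValueError(f"unknown node type: {node.type}")


def average(responses: List[Payload]) -> Payload:
    """Element-wise mean of the first row of each response."""
    rows = [r["data"]["ndarray"][0] for r in responses]
    return {"data": {"ndarray": [[sum(col) / len(col) for col in zip(*rows)]]}}


model_a = Node("model-a", "MODEL", lambda p: {"data": {"ndarray": [[0.0, 1.0]]}})
model_b = Node("model-b", "MODEL", lambda p: {"data": {"ndarray": [[1.0, 0.0]]}})
router = Node("router", "ROUTER", lambda p: 0 if p["data"]["ndarray"][0][0] < 0.5 else 1, [model_a, model_b])
combiner = Node("combiner", "COMBINER", average, [model_a, model_b])

print(execute(router, {"data": {"ndarray": [[0.9]]}}))    # routed to model-b only
print(execute(combiner, {"data": {"ndarray": [[0.9]]}}))  # mean of both responses
```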

Resource Requests/Limits for Service Orchestrator

You can set custom resource requests and limits for this component by specifying them in a `svcOrchSpec` section of your SeldonDeployment.

The example below sets CPU and memory requests for the service orchestrator:

    {
      "apiVersion": "machinelearning.seldon.io/v1alpha2",
      "kind": "SeldonDeployment",
      "metadata": {
        "name": "svcorch"
      },
      "spec": {
        "name": "resources",
        "predictors": [
          {
            "componentSpecs": [
              {
                "spec": {
                  "containers": [
                    {
                      "image": "seldonio/mock_classifier:1.0",
                      "name": "classifier"
                    }
                  ]
                }
              }
            ],
            "graph": {
              "children": [],
              "name": "classifier",
              "type": "MODEL",
              "endpoint": {
                "type": "REST"
              }
            },
            "svcOrchSpec": {
              "resources": {
                "requests": {
                  "cpu": "1",
                  "memory": "3Gi"
                }
              }
            },
            "name": "release-name",
            "replicas": 1
          }
        ]
      }
    }
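If you generate manifests from code, the same `svcOrchSpec` block can be added programmatically. The Python sketch below assumes the manifest is held as a plain dictionary, for example before serialising it to JSON and applying it with kubectl. `with_svc_orch_resources` is a hypothetical helper, not a Seldon Core API. Its optional `limits` argument is an assumption that follows the standard Kubernetes `requests`/`limits` layout.

```python
import copy
import json
from typing import Any, Dict, Optional


def with_svc_orch_resources(
    sdep: Dict[str, Any],
    requests: Dict[str, str],
    limits: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Return a copy of a SeldonDeployment manifest with svcOrchSpec resources set on every predictor."""
    out = copy.deepcopy(sdep)  # leave the caller's manifest untouched
    for predictor in out["spec"]["predictors"]:
        resources = predictor.setdefault("svcOrchSpec", {}).setdefault("resources", {})
        resources["requests"] = dict(requests)
        if limits is not None:
            resources["limits"] = dict(limits)
    return out


base = {
    "apiVersion": "machinelearning.seldon.io/v1alpha2",
    "kind": "SeldonDeployment",
    "metadata": {"name": "svcorch"},
    "spec": {
        "name": "resources",
        "predictors": [
            {
                "componentSpecs": [
                    {"spec": {"containers": [{"image": "seldonio/mock_classifier:1.0", "name": "classifier"}]}}
                ],
                "graph": {"children": [], "name": "classifier", "type": "MODEL", "endpoint": {"type": "REST"}},
                "name": "release-name",
                "replicas": 1,
            }
        ],
    },
}

manifest = with_svc_orch_resources(base, {"cpu": "1", "memory": "3Gi"})
print(json.dumps(manifest, indent=2))
```

Copying the manifest rather than mutating it in place keeps the helper safe to call on a shared template.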

Bypass Service Orchestrator (version >= 0.5.0)

If you are deploying a single model and want to minimize latency and resource usage, you can opt out of including the service orchestrator. To do this, add the annotation `seldon.io/no-engine: "true"` to the predictor. The predictor's graph must consist of a single node. An example is shown below:

    apiVersion: machinelearning.seldon.io/v1alpha2
    kind: SeldonDeployment
    metadata:
      labels:
        app: seldon
      name: noengine
    spec:
      name: noeng
      predictors:
      - annotations:
          seldon.io/no-engine: "true"
        componentSpecs:
        - spec:
            containers:
            - image: seldonio/mock_classifier_rest:1.3
              name: classifier
        graph:
          children: []
          endpoint:
            type: REST
          name: classifier
          type: MODEL
        name: noeng
        replicas: 1

In this case, external API requests are sent directly to your model. At present, only the Python wrapper (>=0.13-SNAPSHOT) has been modified to support this.

Note that no metrics or extra data will be added to the request. If you need them, your model must add them itself.
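Before applying such a manifest, it can help to check a predictor against the two requirements stated in this section: the annotation and the single-node graph. The Python sketch below is a hypothetical pre-flight check. `no_engine_problems` is not part of Seldon Core. It covers only these two rules and does not check the wrapper version your model uses.

```python
from typing import Any, Dict, List

NO_ENGINE_ANNOTATION = "seldon.io/no-engine"


def no_engine_problems(predictor: Dict[str, Any]) -> List[str]:
    """List reasons why a predictor dict cannot bypass the service orchestrator."""
    problems = []
    # Annotation values are strings, so the value must be the quoted "true"
    if predictor.get("annotations", {}).get(NO_ENGINE_ANNOTATION) != "true":
        problems.append(f'missing annotation {NO_ENGINE_ANNOTATION}: "true"')
    # The graph must be a single node, i.e. its root has no children
    if predictor.get("graph", {}).get("children"):
        problems.append("graph must contain a single node")
    return problems


single = {
    "annotations": {NO_ENGINE_ANNOTATION: "true"},
    "graph": {"name": "classifier", "type": "MODEL", "children": []},
}
chained = {
    "graph": {
        "name": "classifier1",
        "type": "MODEL",
        "children": [{"name": "classifier2", "type": "MODEL"}],
    },
}
print(no_engine_problems(single))   # []
print(no_engine_problems(chained))  # two problems: no annotation, multi-node graph
```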

Routing Metadata Injection

For performance reasons, the service orchestrator by default only forwards the request payload, without trying to deserialise it. This can be a blocker for some use cases, such as injecting routing metadata when your inference graph contains routers. This metadata can be useful to indicate which routes each request took.

This behaviour can be changed through the orchestrator's `SELDON_ENABLE_ROUTING_INJECTION` environment variable.

When this variable is enabled (under `svcOrchSpec`), the orchestrator will interpret the request payload and inject this routing metadata for each request.

The example below shows how to switch this flag on:

    apiVersion: machinelearning.seldon.io/v1
    kind: SeldonDeployment
    metadata:
      name: my-graph-with-routers
    spec:
      predictors:
      - svcOrchSpec:
          env:
          - name: SELDON_ENABLE_ROUTING_INJECTION
            value: 'true'
        graph:
          name: router
          type: ROUTER
          # ...
          children:
          - name: model-a
            type: MODEL
            # ...
          - name: model-b
            type: MODEL
            # ...
        name: default
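The Python sketch below illustrates the kind of metadata routing injection adds. It assumes the Seldon protocol, and it assumes that a response's `meta.routing` field maps each router's name to the index of the child it selected. Treat that field layout as an assumption to check against the protocol reference. `collect_routing` and `inject_routing` are hypothetical. They model only the bookkeeping, not how the orchestrator actually implements it.

```python
from typing import Any, Callable, Dict

Payload = Dict[str, Any]


def collect_routing(graph: Dict[str, Any], payload: Payload,
                    routers: Dict[str, Callable[[Payload], int]]) -> Dict[str, int]:
    """Follow router decisions through a nested graph and record router name -> chosen child index."""
    routing: Dict[str, int] = {}
    node = graph
    while node.get("children"):
        if node["type"] == "ROUTER":
            index = routers[node["name"]](payload)
            routing[node["name"]] = index
            node = node["children"][index]
        elif len(node["children"]) == 1:
            node = node["children"][0]  # plain model chain: follow the only child
        else:
            raise ValueError(f"{node['name']}: only routers or single-child nodes are handled here")
    return routing


def inject_routing(response: Payload, routing: Dict[str, int]) -> Payload:
    """Return a copy of the response with the routing map stored under meta.routing."""
    meta = dict(response.get("meta", {}))
    meta["routing"] = dict(routing)
    return {**response, "meta": meta}


graph = {
    "name": "router",
    "type": "ROUTER",
    "children": [{"name": "model-a", "type": "MODEL"}, {"name": "model-b", "type": "MODEL"}],
}
# Hypothetical router that always picks its second child (model-b)
routing = collect_routing(graph, {"data": {"ndarray": [[1.0]]}}, {"router": lambda p: 1})
print(inject_routing({"data": {"ndarray": [[0.7]]}}, routing))
# {'data': {'ndarray': [[0.7]]}, 'meta': {'routing': {'router': 1}}}
```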