Model Explainers

Seldon provides model explanations using its Alibi library.

The v1 explainer server supports explainers saved using Python 3.7. For the v2 protocol (using MLServer), this is no longer a requirement.

| Package | Version |
|---|---|
| | |

Available Methods

Seldon Core supports a subset of the methods currently available in Alibi. Presently, these are:

| Method | Explainer Key |
|---|---|
| | |

Creating your explainer

For Alibi explainers that need to be trained you should

Use python 3.7 as the Seldon Alibi Explain Server also runs in python 3.7.10 when it loads your explainer.

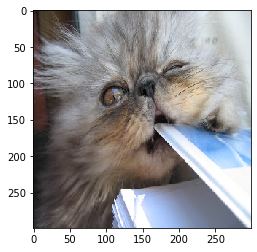

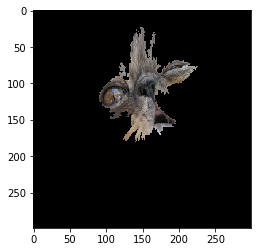

Follow the Alibi docs for your particular desired explainer. The Seldon Wrapper presently supports: Anchors (Tabular, Text and Image), KernelShap and Integrated Gradients.

Save your explainer using explainer.save method and store in the object store or PVC in your cluster. We support various cloud storage solutions through our init container.
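Because the v1 server loads the saved explainer under Python 3.7, it can help to guard the save step. The sketch below uses a hypothetical helper, save_for_v1_server, which is not part of Seldon or Alibi: it assumes explainer is an already-fitted Alibi explainer (any object with a save(path) method) and refuses to save when the interpreter's major.minor version is not 3.7. It only concerns the v1 explainer server; the v2 (MLServer) path lifts this requirement.

```python
import sys

# Major.minor Python version of the v1 Alibi Explain Server (it runs 3.7.10).
SERVER_PYTHON = (3, 7)


def save_for_v1_server(explainer, path, version_info=None):
    """Hypothetical helper: save `explainer` only if this interpreter matches the server.

    `explainer` is assumed to be a fitted Alibi explainer, i.e. any object with a
    `save(path)` method. `version_info` defaults to the running interpreter and
    can be overridden (e.g. in tests).
    """
    if version_info is None:
        version_info = sys.version_info
    running = tuple(version_info[:2])
    if running != SERVER_PYTHON:
        # Refuse to write an artifact the v1 server would load under a different Python.
        raise RuntimeError(
            "explainer would be saved with Python %d.%d, but the v1 explain "
            "server loads it with Python %d.%d" % (running + SERVER_PYTHON)
        )
    explainer.save(path)
```

With Alibi installed, you would call save_for_v1_server(explainer, "./explainer") right after explainer.fit(...), then upload the resulting directory to your object store or PVC.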

The runtime environment in our Alibi Explain Server is locked using Poetry. See our end-to-end (e2e) example for how to use that definition to train your explainers.

V2 protocol for explainers using MLServer (incubating)

Support for the v2 protocol is now handled by MLServer. This is experimental and only works for black-box explainers.

For an end-to-end example, see the AnchorTabular notebook.

Explain API

For the Seldon protocol, an endpoint path will be exposed at:

http://<ingress-gateway>/seldon/<namespace>/<deployment name>-explainer/<predictor name>/api/v1.0/explain
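The path template above can be assembled with a few lines of Python. The helper name build_explain_url is hypothetical; the -explainer suffix appended to the deployment name follows the income-explainer path used in the port-forwarding example for the income deployment.

```python
def build_explain_url(ingress_gateway, namespace, deployment_name, predictor_name):
    """Hypothetical helper: Seldon-protocol explain URL for one predictor.

    The explainer is addressed as '<deployment_name>-explainer', matching the
    'income-explainer' path used for the 'income' deployment example.
    """
    return "http://{}/seldon/{}/{}-explainer/{}/api/v1.0/explain".format(
        ingress_gateway, namespace, deployment_name, predictor_name
    )
```

For the income deployment reached through localhost:8003, build_explain_url("localhost:8003", "seldon", "income", "default") yields the same URL as the port-forwarding example.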

For example, if you deployed:

    apiVersion: machinelearning.seldon.io/v1
    kind: SeldonDeployment
    metadata:
      name: income
      namespace: seldon
    spec:
      name: income
      annotations:
        seldon.io/rest-timeout: "100000"
      predictors:
      - graph:
          children: []
          implementation: SKLEARN_SERVER
          modelUri: gs://seldon-models/sklearn/income/model-0.23.2
          name: classifier
        explainer:
          type: AnchorTabular
          modelUri: gs://seldon-models/sklearn/income/explainer-py36-0.5.2
        name: default
        replicas: 1

If you were port-forwarding to Ambassador or Istio on localhost:8003, the API call would be:

http://localhost:8003/seldon/seldon/income-explainer/default/api/v1.0/explain
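A minimal standard-library sketch of that call follows. build_explain_request and explain are hypothetical helper names, and the {"data": {"ndarray": ...}} body assumes the usual Seldon-protocol request format; the rows you pass must match the features your model expects.

```python
import json
import urllib.request

# Endpoint from the port-forwarding example above.
EXPLAIN_URL = "http://localhost:8003/seldon/seldon/income-explainer/default/api/v1.0/explain"


def build_explain_request(url, rows):
    """Hypothetical helper: POST request carrying a Seldon-protocol ndarray payload."""
    body = json.dumps({"data": {"ndarray": rows}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def explain(rows, url=EXPLAIN_URL, timeout=100.0):
    """Send the request and decode the JSON explanation (needs a reachable endpoint)."""
    with urllib.request.urlopen(build_explain_request(url, rows), timeout=timeout) as resp:
        return json.load(resp)
```

The 100 second client timeout is an assumption chosen to roughly match the seldon.io/rest-timeout: "100000" annotation in the example deployment (read as milliseconds), since black-box explanations such as anchors can take far longer than a single prediction.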

The explain method is also supported for the tensorflow and v2 protocols. The full list of endpoint URIs is:

| Protocol | URI |
|---|---|
| seldon | |
| tensorflow | |
| v2 | |

Note: for the tensorflow protocol, we support a non-standard extension similar to the one for the prediction API: http://<host>/<ingress-path>/v1/models/:explain.
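A sketch of a request against that non-standard tensorflow-protocol path, with no model name in the URL. build_tf_explain_request is a hypothetical helper, and the {"instances": ...} body is an assumption that the explain endpoint accepts the same row format as the tensorflow prediction API; check it against your deployment before relying on it.

```python
import json
import urllib.request


def build_tf_explain_request(host, ingress_path, instances):
    """Hypothetical helper: POST to http://<host>/<ingress-path>/v1/models/:explain.

    The {"instances": ...} body assumes explain accepts the same rows as the
    tensorflow prediction API.
    """
    # Join host and ingress path without doubling or dropping slashes.
    prefix = "/".join(part for part in (host, ingress_path.strip("/")) if part)
    url = "http://{}/v1/models/:explain".format(prefix)
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it works the same way as for the Seldon protocol, for example with urllib.request.urlopen(req, timeout=...).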