This page was generated from examples/mnist_triton_e2e/mnist-triton.ipynb.

TensorFlow MNIST model and Triton (e2e example)

Prerequisites

A Kubernetes cluster with kubectl configured

rclone

curl

Poetry (optional)

Setup Seldon Core

Use the setup notebook to set up a cluster with Ambassador ingress and install Seldon Core. Instructions are also available online.

We will assume that the Ambassador (or Istio) ingress is port-forwarded to localhost:8003.
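With that port-forward in place, every request in this example goes through a path of the form `/seldon/<namespace>/<deployment>/...`, here `/seldon/seldon/mnist`. The helper below is a hypothetical convenience and not part of Seldon Core. It assumes that path convention and the `localhost:8003` port-forward.

```python
# Hypothetical helper: builds Seldon Core ingress URLs, assuming the
# /seldon/<namespace>/<deployment>/ prefix used throughout this example.
BASE = "http://localhost:8003"  # assumption: ingress port-forwarded here


def seldon_url(namespace: str, deployment: str, path: str = "") -> str:
    """Return the ingress URL for a SeldonDeployment, optionally with a sub-path."""
    url = f"{BASE}/seldon/{namespace}/{deployment}"
    if path:
        url += "/" + path.lstrip("/")
    return url


print(seldon_url("seldon", "mnist", "v2/models/mnist"))
```

For example, `seldon_url("seldon", "mnist", "v2/models/mnist/infer")` gives the inference endpoint used by `predict` later on.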

Setup MinIO

Use the provided notebook to install MinIO in your cluster. Instructions are also available online.

We will assume that the MinIO service is port-forwarded to localhost:8090.

[1]:

%%writefile rclone.conf
[s3]
type = s3
provider = minio
env_auth = false
access_key_id = admin@seldon.io
secret_access_key = 12341234
endpoint = http://localhost:8090

Overwriting rclone.conf

[2]:

%%writefile secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: seldon-rclone-secret
type: Opaque
stringData:
  RCLONE_CONFIG_S3_TYPE: s3
  RCLONE_CONFIG_S3_PROVIDER: minio
  RCLONE_CONFIG_S3_ENV_AUTH: "false"
  RCLONE_CONFIG_S3_ACCESS_KEY_ID: "admin@seldon.io"
  RCLONE_CONFIG_S3_SECRET_ACCESS_KEY: "12341234"
  RCLONE_CONFIG_S3_ENDPOINT: http://minio.minio-system.svc.cluster.local:9000

Overwriting secret.yaml

[3]:

!kubectl apply -f secret.yaml

secret/seldon-rclone-secret configured
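The secret holds the same settings as `rclone.conf`, expressed as environment variables. rclone reads the options for a remote named `s3` from variables named `RCLONE_CONFIG_S3_<OPTION>`. The remote name `s3` in these variables appears to be what the `s3:` prefix of `modelUri` in the deployment refers to. The endpoint differs because the model initializer runs inside the cluster and reaches MinIO through its service DNS name, not through the local port-forward. The sketch below shows the mapping with Python's `configparser`. `rclone_env` is a hypothetical helper, and the credentials are left out of the sample config.

```python
import configparser

# Sample [s3] section using the in-cluster endpoint from secret.yaml (credentials omitted).
SAMPLE_CONF = """
[s3]
type = s3
provider = minio
env_auth = false
endpoint = http://minio.minio-system.svc.cluster.local:9000
"""


def rclone_env(conf_text: str, remote: str) -> dict:
    """Map one [remote] section of an rclone config to RCLONE_CONFIG_<REMOTE>_<OPTION> variables."""
    parser = configparser.ConfigParser(interpolation=None)  # keep any '%' in secrets literal
    parser.read_string(conf_text)
    prefix = f"RCLONE_CONFIG_{remote.upper()}_"
    return {prefix + key.upper(): value for key, value in parser[remote].items()}


for name, value in rclone_env(SAMPLE_CONF, "s3").items():
    print(f"{name}: {value}")
```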

Poetry (optional)

We will use poetry.lock to fully pin the training environment. Install Poetry following the official documentation. Usually this comes down to:

curl -sSL https://install.python-poetry.org | python3 - --version 1.1.15

Note: because the runtime environment is defined by the Triton Server image, this step is optional. However, keeping the training environment fully reproducible is usually the right thing to do.

Train MNIST Model

Prepare training environment

[4]:

!conda create --yes --prefix ./venv python=3.8

Collecting package metadata (current_repodata.json): done

Solving environment: done

## Package Plan ##

environment location: /home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/venv

added / updated specs:

- conda-ecosystem-user-package-isolation

- python=3.8

The following NEW packages will be INSTALLED:

_libgcc_mutex conda-forge/linux-64::_libgcc_mutex-0.1-conda_forge

_openmp_mutex conda-forge/linux-64::_openmp_mutex-4.5-1_gnu

ca-certificates conda-forge/linux-64::ca-certificates-2021.10.8-ha878542_0

conda-ecosystem-u~ conda-forge/linux-64::conda-ecosystem-user-package-isolation-1.0-0

ld_impl_linux-64 conda-forge/linux-64::ld_impl_linux-64-2.36.1-hea4e1c9_2

libffi conda-forge/linux-64::libffi-3.4.2-h9c3ff4c_4

libgcc-ng conda-forge/linux-64::libgcc-ng-11.2.0-h1d223b6_11

libgomp conda-forge/linux-64::libgomp-11.2.0-h1d223b6_11

libnsl conda-forge/linux-64::libnsl-2.0.0-h7f98852_0

libstdcxx-ng conda-forge/linux-64::libstdcxx-ng-11.2.0-he4da1e4_11

libzlib conda-forge/linux-64::libzlib-1.2.11-h36c2ea0_1013

ncurses conda-forge/linux-64::ncurses-6.2-h58526e2_4

openssl conda-forge/linux-64::openssl-3.0.0-h7f98852_2

pip conda-forge/noarch::pip-21.3.1-pyhd8ed1ab_0

python conda-forge/linux-64::python-3.8.12-hf930737_2_cpython

python_abi conda-forge/linux-64::python_abi-3.8-2_cp38

readline conda-forge/linux-64::readline-8.1-h46c0cb4_0

setuptools conda-forge/linux-64::setuptools-58.5.3-py38h578d9bd_0

sqlite conda-forge/linux-64::sqlite-3.36.0-h9cd32fc_2

tk conda-forge/linux-64::tk-8.6.11-h27826a3_1

wheel conda-forge/noarch::wheel-0.37.0-pyhd8ed1ab_1

xz conda-forge/linux-64::xz-5.2.5-h516909a_1

zlib conda-forge/linux-64::zlib-1.2.11-h36c2ea0_1013

Preparing transaction: done

Verifying transaction: done

Executing transaction: done

#

# To activate this environment, use

#

# $ conda activate /home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/venv

#

# To deactivate an active environment, use

#

# $ conda deactivate

[5]:

%%writefile pyproject.toml
[tool.poetry]
name = "mnist_triton_e2e"
version = "0.1.0"
description = ""
authors = ["Seldon Technologies Ltd. <hello@seldon.io>"]
license = "Business Source License 1.1"

[tool.poetry.dependencies]
python = "~3.8"
tensorflow = "2.6.2"

[tool.poetry.dev-dependencies]

[build-system]
requires = ["poetry-core>=1.0.0"]
build-backend = "poetry.core.masonry.api"

Overwriting pyproject.toml

On the first run, `poetry install` creates a poetry.lock file. Every subsequent run installs the exact package versions pinned in that lock file.

[6]:

%%bash
source ~/miniconda3/etc/profile.d/conda.sh
conda activate ./venv
poetry install

Installing dependencies from lock file

Package operations: 37 installs, 0 updates, 0 removals

• Installing certifi (2021.10.8)

• Installing charset-normalizer (2.0.7)

• Installing idna (3.3)

• Installing pyasn1 (0.4.8)

• Installing urllib3 (1.26.7)

• Installing cachetools (4.2.4)

• Installing oauthlib (3.1.1)

• Installing pyasn1-modules (0.2.8)

• Installing rsa (4.7.2)

• Installing requests (2.26.0)

• Installing six (1.15.0)

• Installing google-auth (1.35.0)

• Installing requests-oauthlib (1.3.0)

• Installing absl-py (0.15.0)

• Installing google-auth-oauthlib (0.4.6)

• Installing grpcio (1.41.1)

• Installing numpy (1.19.5)

• Installing tensorboard-data-server (0.6.1)

• Installing tensorboard-plugin-wit (1.8.0)

• Installing protobuf (3.19.1)

• Installing werkzeug (2.0.2)

• Installing markdown (3.3.4)

• Installing astunparse (1.6.3)

• Installing clang (5.0)

• Installing gast (0.4.0)

• Installing google-pasta (0.2.0)

• Installing keras (2.6.0)

• Installing keras-preprocessing (1.1.2)

• Installing opt-einsum (3.3.0)

• Installing tensorboard (2.6.0)

• Installing termcolor (1.1.0)

• Installing h5py (3.1.0)

• Installing flatbuffers (1.12)

• Installing typing-extensions (3.7.4.3)

• Installing tensorflow-estimator (2.6.0)

• Installing wrapt (1.12.1)

• Installing tensorflow (2.6.2)

Prepare Training Script

[7]:

%%writefile train.py
import numpy as np
import tensorflow as tf
from tensorflow.keras.datasets import mnist
from tensorflow.keras.layers import Convolution2D, Dense, Dropout, Flatten, MaxPooling2D
from tensorflow.keras.models import Sequential
from tensorflow.keras.utils import to_categorical

# Seed NumPy and TensorFlow for reproducibility (GPU kernels may still be non-deterministic)
np.random.seed(123)
tf.random.set_seed(123)

# Load pre-shuffled MNIST data into train and test sets
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Add a channel dimension, (N, 28, 28) -> (N, 28, 28, 1), and scale pixels to [0, 1]
X_train = X_train.reshape(-1, 28, 28, 1)
X_test = X_test.reshape(-1, 28, 28, 1)
X_train = X_train.astype("float32")
X_test = X_test.astype("float32")
X_train /= 255
X_test /= 255

# Convert 1-dimensional class arrays to 10-dimensional one-hot class matrices
Y_train = to_categorical(y_train, 10)
Y_test = to_categorical(y_test, 10)

# define model
model = Sequential()
# declare input layer
model.add(Convolution2D(32, (3, 3), activation="relu", input_shape=(28, 28, 1)))
# add more layers
model.add(Convolution2D(32, (3, 3), activation="relu"))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.25))
# and even more layers
model.add(Flatten())
model.add(Dense(128, activation="relu"))
model.add(Dropout(0.5))
model.add(Dense(10, activation="softmax"))
model.summary()

# compile model and train
model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
model.fit(X_train, Y_train, epochs=4, batch_size=32, verbose=1)
score = model.evaluate(X_test, Y_test, verbose=0)
print("\n%s: %.2f%%" % (model.metrics_names[1], score[1] * 100))

# save model in SavedModel format as <repository>/<model>/<version>/model.savedmodel
model.save("models-repository/mnist/1/model.savedmodel")

Overwriting train.py

Train Model

[8]:

!./venv/bin/python3 train.py

2021-11-09 00:16:11.767552: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:11.774042: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:11.774341: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:11.774801: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2021-11-09 00:16:11.775503: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:11.775756: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:11.775996: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:12.252515: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:12.252816: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:12.253061: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2021-11-09 00:16:12.253297: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1510] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 1356 MB memory: -> device: 0, name: NVIDIA GeForce GTX 1650, pci bus id: 0000:01:00.0, compute capability: 7.5

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 26, 26, 32)        320
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 24, 24, 32)        9248
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 12, 12, 32)        0
_________________________________________________________________
dropout (Dropout)            (None, 12, 12, 32)        0
_________________________________________________________________
flatten (Flatten)            (None, 4608)              0
_________________________________________________________________
dense (Dense)                (None, 128)               589952
_________________________________________________________________
dropout_1 (Dropout)          (None, 128)               0
_________________________________________________________________
dense_1 (Dense)              (None, 10)                1290
=================================================================
Total params: 600,810
Trainable params: 600,810
Non-trainable params: 0
_________________________________________________________________

2021-11-09 00:16:12.553857: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)

Epoch 1/4

2021-11-09 00:16:13.375537: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8101

1875/1875 [==============================] - 8s 3ms/step - loss: 0.2046 - accuracy: 0.9378

Epoch 2/4

1875/1875 [==============================] - 5s 3ms/step - loss: 0.0872 - accuracy: 0.9742

Epoch 3/4

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0676 - accuracy: 0.9791

Epoch 4/4

1875/1875 [==============================] - 6s 3ms/step - loss: 0.0546 - accuracy: 0.9833

accuracy: 98.98%

2021-11-09 00:16:38.051127: W tensorflow/python/util/util.cc:348] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.
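The output shapes and parameter counts in the `model.summary()` table above can be recomputed by hand. A 3×3 "valid" convolution shrinks each spatial side by 2 and has 3·3·C_in·filters weights plus one bias per filter. 2×2 max pooling halves each side. A dense layer has n_in·n_out weights plus n_out biases. Dropout and Flatten add no parameters. The sketch below, with hypothetical helper names, reproduces the reported total.

```python
# Recompute the shapes and parameter counts reported by model.summary().
def conv2d(h, w, c_in, filters, k=3):
    """'valid' k x k convolution, stride 1: each side shrinks by k - 1; weights + one bias per filter."""
    return (h - k + 1, w - k + 1, filters), k * k * c_in * filters + filters


def dense(n_in, n_out):
    """Fully connected layer: weight matrix plus bias vector."""
    return n_in * n_out + n_out


shape1, p1 = conv2d(28, 28, 1, 32)                      # (26, 26, 32), 320
shape2, p2 = conv2d(*shape1, 32)                        # (24, 24, 32), 9248
pooled = (shape2[0] // 2, shape2[1] // 2, shape2[2])    # 2x2 max pooling -> (12, 12, 32)
flat = pooled[0] * pooled[1] * pooled[2]                # Flatten -> 4608
p3 = dense(flat, 128)                                   # 589952
p4 = dense(128, 10)                                     # 1290
total = p1 + p2 + p3 + p4
print(shape1, shape2, pooled, flat, total)              # ... 4608 600810
```

Almost all of the parameters (589,952 of 600,810) sit in the first dense layer, because it connects every one of the 4,608 flattened features to 128 units.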

Deploy and Test

Configure Triton Server

[9]:

%%writefile models-repository/mnist/config.pbtxt
name: "mnist"
platform: "tensorflow_savedmodel"
max_batch_size: 100
dynamic_batching { preferred_batch_size: [ 50 ] }
instance_group [ { count: 2 } ]
input [
  {
    name: "conv2d_input"
    data_type: TYPE_FP32
    dims: [ 28, 28, 1 ]
  }
]
output [
  {
    name: "dense_1"
    data_type: TYPE_FP32
    dims: [ 10 ]
  }
]

Overwriting models-repository/mnist/config.pbtxt
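`max_batch_size` is greater than zero, so the `dims` above describe a single example. Triton adds a leading variable-size batch dimension to each one. This is why the model metadata later reports the input shape as `[-1, 28, 28, 1]`. The input and output names must also match the tensors in the SavedModel, here the Keras layer names `conv2d_input` and `dense_1`. The sketch below models the batch-dimension rule together with a simplified request-shape check. `full_shape` and `accepts` are hypothetical helpers, not Triton APIs.

```python
def full_shape(dims, max_batch_size):
    """Shape Triton reports for a tensor: batching models get a leading -1 (any batch size)."""
    return [-1] + list(dims) if max_batch_size > 0 else list(dims)


def accepts(shape, dims, max_batch_size):
    """Simplified check of a request shape against one config tensor."""
    expected = full_shape(dims, max_batch_size)
    if len(shape) != len(expected):
        return False
    if max_batch_size > 0 and shape[0] > max_batch_size:
        return False  # batch larger than max_batch_size
    return all(e == -1 or e == s for e, s in zip(expected, shape))


print(full_shape([28, 28, 1], 100))                 # [-1, 28, 28, 1]
print(accepts((10, 28, 28, 1), [28, 28, 1], 100))   # True
print(accepts((101, 28, 28, 1), [28, 28, 1], 100))  # False
```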

Copy model into MinIO instance

[10]:

!tree models-repository/

models-repository/
└── mnist
    ├── 1
    │   └── model.savedmodel
    │       ├── assets
    │       ├── keras_metadata.pb
    │       ├── saved_model.pb
    │       └── variables
    │           ├── variables.data-00000-of-00001
    │           └── variables.index
    └── config.pbtxt

5 directories, 5 files
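The tree above follows Triton's model repository layout: `<repository>/<model-name>/config.pbtxt` plus one or more numeric version directories. Each version directory holds the model file, which for the `tensorflow_savedmodel` platform defaults to a `model.savedmodel` directory. A quick local check before uploading could look like the hypothetical `check_model_dir` below. It only tests the layout, not the model contents.

```python
from pathlib import Path


def check_model_dir(model_dir: Path, model_file: str = "model.savedmodel") -> list:
    """Return layout problems for one Triton model directory (empty list if none found)."""
    if not model_dir.is_dir():
        return [f"{model_dir} is not a directory"]
    problems = []
    if not (model_dir / "config.pbtxt").is_file():
        problems.append("missing config.pbtxt")
    # Version directories are named with integers, e.g. 1, 2, ...
    versions = sorted(
        (p for p in model_dir.iterdir() if p.is_dir() and p.name.isdigit()),
        key=lambda p: int(p.name),
    )
    if not versions:
        problems.append("no numeric version directory")
    for version in versions:
        if not (version / model_file).exists():
            problems.append(f"{version.name}/{model_file} not found")
    return problems


repo_model = Path("models-repository/mnist")
if repo_model.is_dir():
    print(check_model_dir(repo_model))  # expected [] for the tree shown above
```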

[11]:

!rclone -vvv --config="rclone.conf" copy models-repository s3://triton-models/mnist-model

2021/11/09 00:16:39 DEBUG : rclone: Version "v1.56.2" starting with parameters ["/home/rskolasinski/.asdf/installs/rclone/1.56.2/bin/rclone" "-vvv" "--config=rclone.conf" "copy" "models-repository" "s3://triton-models/mnist-model"]

2021/11/09 00:16:39 DEBUG : Creating backend with remote "models-repository"

2021/11/09 00:16:39 DEBUG : Using config file from "/home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/rclone.conf"

2021/11/09 00:16:39 DEBUG : fs cache: renaming cache item "models-repository" to be canonical "/home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/models-repository"

2021/11/09 00:16:39 DEBUG : Creating backend with remote "s3://triton-models/mnist-model"

2021/11/09 00:16:39 DEBUG : fs cache: renaming cache item "s3://triton-models/mnist-model" to be canonical "s3:triton-models/mnist-model"

2021/11/09 00:16:39 DEBUG : mnist/config.pbtxt: Modification times differ by -8m54.901977129s: 2021-11-09 00:16:39.278686721 +0000 GMT, 2021-11-09 00:07:44.376709592 +0000 GMT

2021/11/09 00:16:39 DEBUG : mnist/config.pbtxt: md5 = 8bf5c24a18dff3603cf2cdcbd5d5e929 OK

2021/11/09 00:16:39 DEBUG : S3 bucket triton-models path mnist-model: Waiting for checks to finish

2021/11/09 00:16:39 INFO : mnist/config.pbtxt: Updated modification time in destination

2021/11/09 00:16:39 DEBUG : mnist/config.pbtxt: Unchanged skipping

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/keras_metadata.pb: Modification times differ by -54m41.948759938s: 2021-11-09 00:16:38.51069757 +0000 GMT, 2021-11-08 23:21:56.561937632 +0000 GMT

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/saved_model.pb: Modification times differ by -54m41.948760136s: 2021-11-09 00:16:38.49869774 +0000 GMT, 2021-11-08 23:21:56.549937604 +0000 GMT

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/keras_metadata.pb: md5 = a4c5251702f32808e94e0d2b89a85aec OK

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.index: Modification times differ by -54m41.956760288s: 2021-11-09 00:16:38.490697853 +0000 GMT, 2021-11-08 23:21:56.533937565 +0000 GMT

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.index: md5 = 6e5f3d005dbe11b90507f2865a1a0713 (Local file system at /home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/models-repository)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.index: md5 = be8df29950bcde41116269ed7b7ac6a2 (S3 bucket triton-models path mnist-model)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.index: md5 differ

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/saved_model.pb: md5 = 936afcc716e6828695b1a11ad5a0d691 (Local file system at /home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/models-repository)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/saved_model.pb: md5 = dcc8acd1b44e285751f93d4f3499decd (S3 bucket triton-models path mnist-model)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/saved_model.pb: md5 differ

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.data-00000-of-00001: Modification times differ by -54m41.960760297s: 2021-11-09 00:16:38.490697853 +0000 GMT, 2021-11-08 23:21:56.529937556 +0000 GMT

2021/11/09 00:16:39 INFO : mnist/1/model.savedmodel/keras_metadata.pb: Updated modification time in destination

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/keras_metadata.pb: Unchanged skipping

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/saved_model.pb: md5 = 936afcc716e6828695b1a11ad5a0d691 OK

2021/11/09 00:16:39 INFO : mnist/1/model.savedmodel/saved_model.pb: Copied (replaced existing)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.index: md5 = 6e5f3d005dbe11b90507f2865a1a0713 OK

2021/11/09 00:16:39 INFO : mnist/1/model.savedmodel/variables/variables.index: Copied (replaced existing)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.data-00000-of-00001: md5 = 254dd0a480879c42a9ec648b6f1fabf4 (Local file system at /home/rskolasinski/work/seldon-core-mnist-triton/examples/mnist_triton_e2e/models-repository)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.data-00000-of-00001: md5 = 176268afe35d0445761ce3c0698f4d16 (S3 bucket triton-models path mnist-model)

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.data-00000-of-00001: md5 differ

2021/11/09 00:16:39 DEBUG : S3 bucket triton-models path mnist-model: Waiting for transfers to finish

2021/11/09 00:16:39 DEBUG : mnist/1/model.savedmodel/variables/variables.data-00000-of-00001: md5 = 254dd0a480879c42a9ec648b6f1fabf4 OK

2021/11/09 00:16:39 INFO : mnist/1/model.savedmodel/variables/variables.data-00000-of-00001: Copied (replaced existing)

2021/11/09 00:16:39 INFO :

Transferred: 7.026Mi / 7.026 MiByte, 100%, 0 Byte/s, ETA -

Checks: 5 / 5, 100%

Transferred: 3 / 3, 100%

Elapsed time: 0.0s

2021/11/09 00:16:39 DEBUG : 15 go routines active
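The debug log above shows how rclone decided what to upload. Files whose modification times differed but whose MD5 hashes matched only had their destination timestamp updated. Files with differing hashes were copied again. The sketch below is a simplified model of that decision as it appears in this log, not rclone's actual implementation. `sync_action` is a hypothetical name.

```python
import hashlib
from typing import Optional


def md5_hex(data: bytes) -> str:
    return hashlib.md5(data).hexdigest()


def sync_action(src: bytes, src_mtime: float,
                dst: Optional[bytes], dst_mtime: Optional[float]) -> str:
    """Simplified copy decision matching the log above; not rclone's actual code."""
    if dst is None or len(src) != len(dst):
        return "copy"            # missing or different size: upload
    if src_mtime == dst_mtime:
        return "skip"            # same size and modtime: assume unchanged
    if md5_hex(src) == md5_hex(dst):
        return "update modtime"  # "Updated modification time in destination"
    return "copy"                # "md5 differ" -> "Copied (replaced existing)"


print(sync_action(b"config", 2.0, b"config", 1.0))          # update modtime
print(sync_action(b"weights-v2", 2.0, b"weights-v1", 1.0))  # copy
```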

Deploy

[12]:

%%writefile deployment.yaml
apiVersion: machinelearning.seldon.io/v1
kind: SeldonDeployment
metadata:
  name: mnist
  namespace: seldon
spec:
  name: default
  predictors:
  - graph:
      implementation: TRITON_SERVER
      logger:
        mode: all
      modelUri: s3:triton-models/mnist-model
      envSecretRefName: seldon-rclone-secret
      name: mnist
      type: MODEL
    name: default
    replicas: 1
  protocol: kfserving

Overwriting deployment.yaml

[13]:

!kubectl apply -f deployment.yaml

seldondeployment.machinelearning.seldon.io/mnist unchanged

[14]:

!kubectl rollout status deploy/$(kubectl get deploy -l seldon-deployment-id=mnist -o jsonpath='{.items[0].metadata.name}')

deployment "mnist-default-0-mnist" successfully rolled out

Test (dummy data)

[15]:

import numpy as np

import requests

[16]:

!curl -s http://localhost:8003/seldon/seldon/mnist/v2/models/mnist | jq .

{
  "name": "mnist",
  "versions": [
    "1"
  ],
  "platform": "tensorflow_savedmodel",
  "inputs": [
    {
      "name": "conv2d_input",
      "datatype": "FP32",
      "shape": [
        -1,
        28,
        28,
        1
      ]
    }
  ],
  "outputs": [
    {
      "name": "dense_1",
      "datatype": "FP32",
      "shape": [
        -1,
        10
      ]
    }
  ]
}
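The metadata above is enough to build a valid request. The input name, the datatype, and the shape (with `-1` as the variable batch dimension) go straight into the V2 inference request body. The sketch below uses only the standard library and sends the tensor as a flattened, row-major list, as the V2 protocol describes. `predict` instead sends nested lists from `tolist()`, which Triton also accepted here. `build_request` is a hypothetical helper, and the metadata is an abbreviated copy of the response above.

```python
import json
import math

# Abbreviated copy of the metadata returned above (outputs omitted).
METADATA = json.loads(
    '{"name": "mnist", "inputs": [{"name": "conv2d_input", "datatype": "FP32",'
    ' "shape": [-1, 28, 28, 1]}]}'
)


def build_request(metadata, flat_data, shape):
    """Build a V2 /infer body for the first declared input, validating the shape."""
    spec = metadata["inputs"][0]
    declared = spec["shape"]
    if len(declared) != len(shape) or any(d not in (-1, s) for d, s in zip(declared, shape)):
        raise ValueError(f"shape {shape} does not match declared {declared}")
    if len(flat_data) != math.prod(shape):
        raise ValueError("data length does not match shape")
    return {"inputs": [{"name": spec["name"], "datatype": spec["datatype"],
                        "shape": list(shape), "data": list(flat_data)}]}


body = build_request(METADATA, [0.0] * (2 * 28 * 28), [2, 28, 28, 1])
print(json.dumps(body)[:80])
```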

[17]:

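URL = "http://localhost:8003/seldon/seldon/mnist"

def predict(data):
    """Send a batch of images to the V2 inference endpoint; return the predicted class per image."""
    payload = {
        "inputs": [
            {
                "name": "conv2d_input",
                "data": data.tolist(),
                "datatype": "FP32",
                "shape": list(data.shape),
            }
        ]
    }
    r = requests.post(f"{URL}/v2/models/mnist/infer", json=payload)
    r.raise_for_status()  # fail loudly on HTTP errors instead of a KeyError below
    output = r.json()["outputs"][0]
    # The server returns flat data plus a shape; restore [batch, 10] before taking argmax
    predictions = np.array(output["data"]).reshape(output["shape"])
    return [int(np.argmax(x)) for x in predictions]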

[18]:

output = predict(np.random.rand(10, 28, 28, 1))

output

[18]:

[8, 8, 8, 8, 2, 8, 8, 8, 8, 3]
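The classes returned for random noise carry no meaning. They only confirm that the request reached the model and a response came back. The response carries each output tensor as a flat `data` list plus its `shape`, which `predict` reshapes with NumPy before taking the argmax of each row. The standard-library sketch below does the same on a made-up response fragment. `decode_argmax` is a hypothetical helper.

```python
def decode_argmax(output: dict) -> list:
    """Split a flat [batch, classes] V2 output tensor into rows and take the argmax of each."""
    batch, classes = output["shape"]
    data = output["data"]
    rows = [data[i * classes:(i + 1) * classes] for i in range(batch)]
    # max() returns the first maximal index on ties, like np.argmax
    return [max(range(classes), key=row.__getitem__) for row in rows]


# Made-up response fragment: two images, three classes (illustration only).
example = {"name": "dense_1", "datatype": "FP32", "shape": [2, 3],
           "data": [0.1, 0.7, 0.2, 0.6, 0.3, 0.1]}
print(decode_argmax(example))  # [1, 0]
```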

Test (real data - optional)

[19]:

import matplotlib.pyplot as plt

from tensorflow.keras.datasets import mnist

from tensorflow.keras.utils import to_categorical

2021-11-09 00:16:41.193198: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0

[20]:

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_test = X_test.reshape(-1, 28, 28, 1)

X_test = X_test.astype("float32")

X_test /= 255

Y_test = to_categorical(y_test, 10)

[21]:

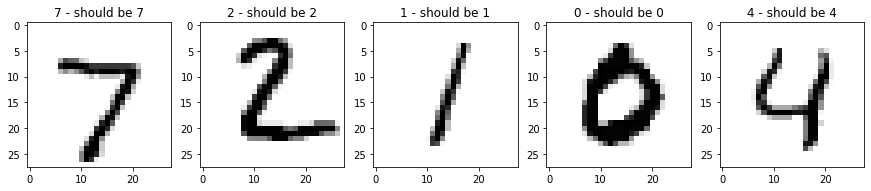

predictions = predict(X_test[:5])

fig, axs = plt.subplots(1, 5, figsize=(15, 3))

for i in range(5):

axs[i].imshow(X_test[i, :, :, 0], cmap="binary")

axs[i].set_title("{} - should be {}".format(predictions[i], np.argmax(Y_test[i])))